I am a 1st-year M.Phil. student at HKU IDS, advised by Yingyu Liang and Andrew F. Luo. Before that, I obtained my B.S. degree in Mathematics from Middle Tennessee State University in 2025. My interests focus on the mathematical principles underlying large language models (LLMs) and general intelligence, including representation, optimization, generalization, and reasoning. I truly enjoy discussing and exchanging research ideas.

I am actively seeking industry opportunities for Summer 2026. Please feel free to reach me at b0chen AT connect DOT hku DOT hk to explore potential collaborations.

Publications

* denotes equal contribution or alphabetical order.

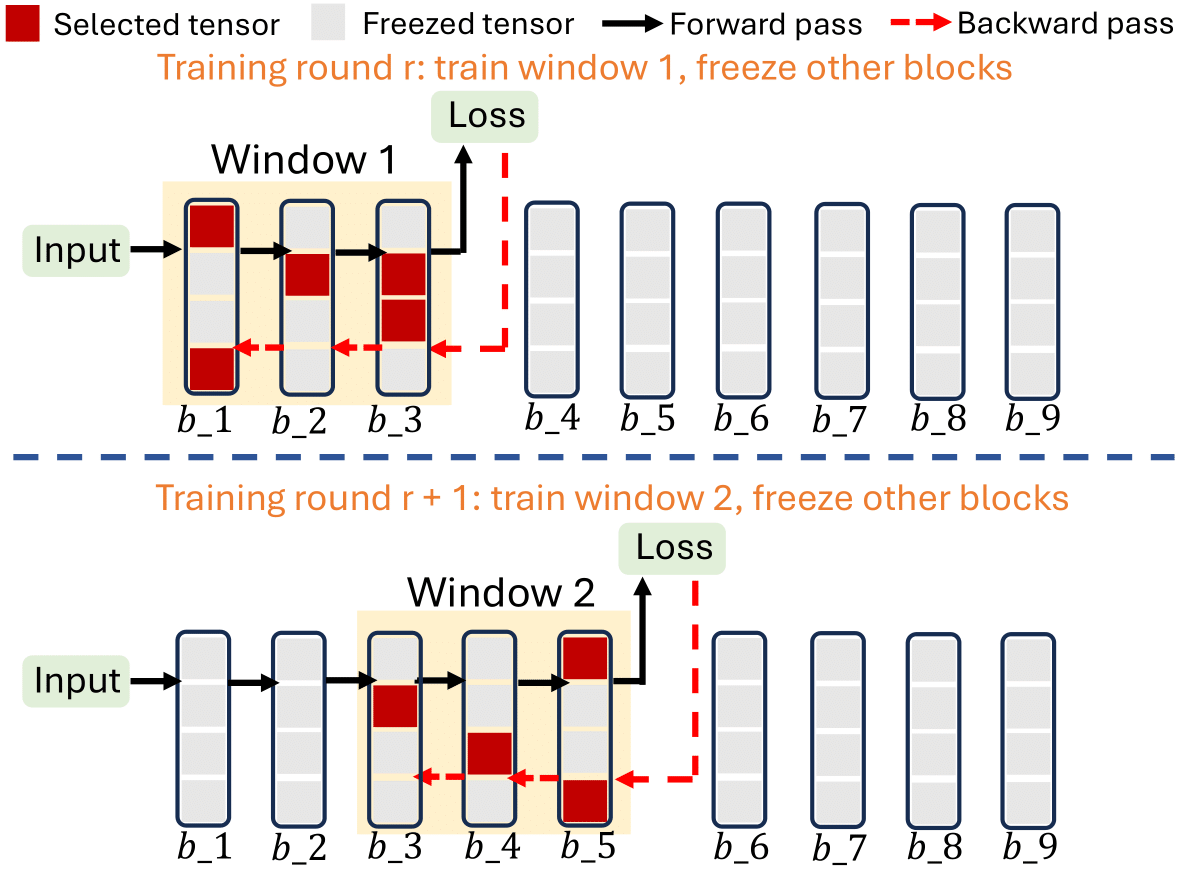

FedEL: Federated Elastic Learning for Heterogeneous Devices

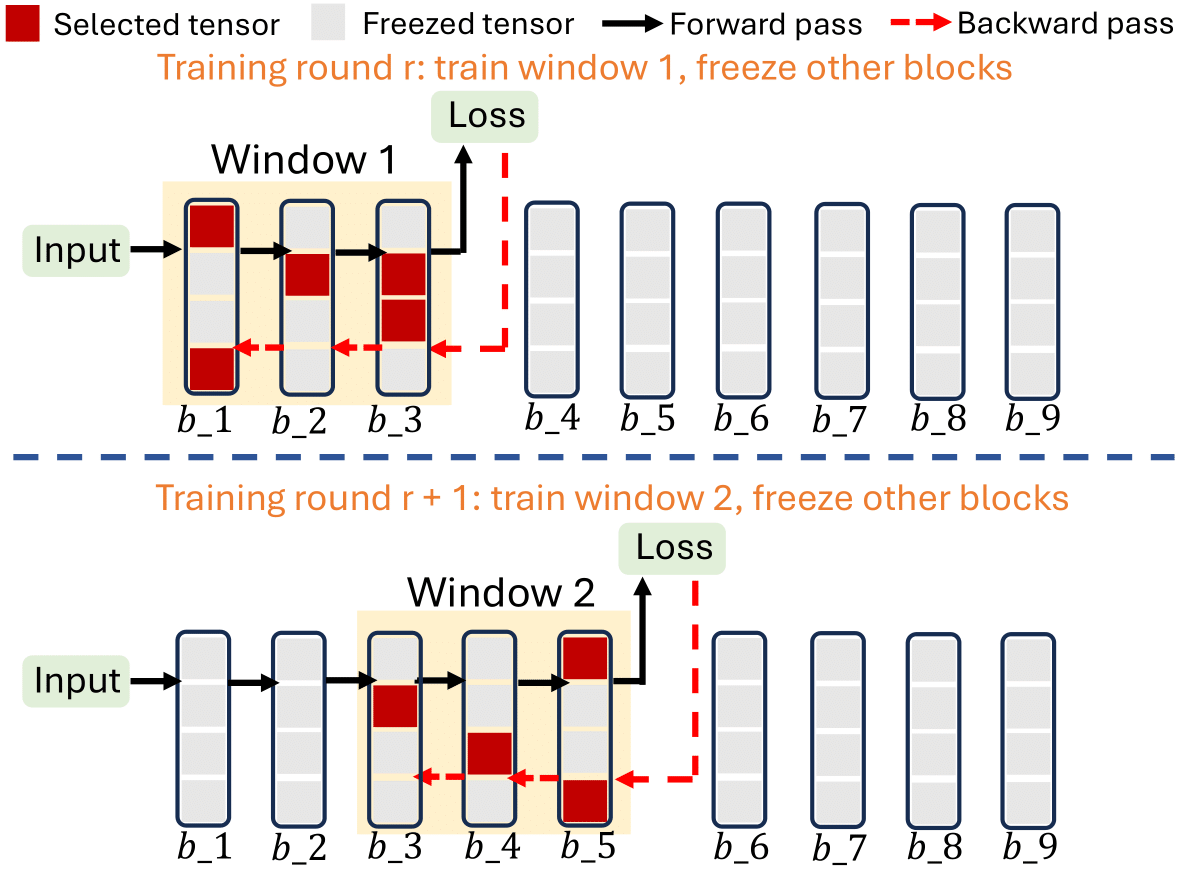

Circuit Complexity Bounds for RoPE-based Transformer Architecture*

High-Order Matching for One-Step Shortcut Diffusion Models*

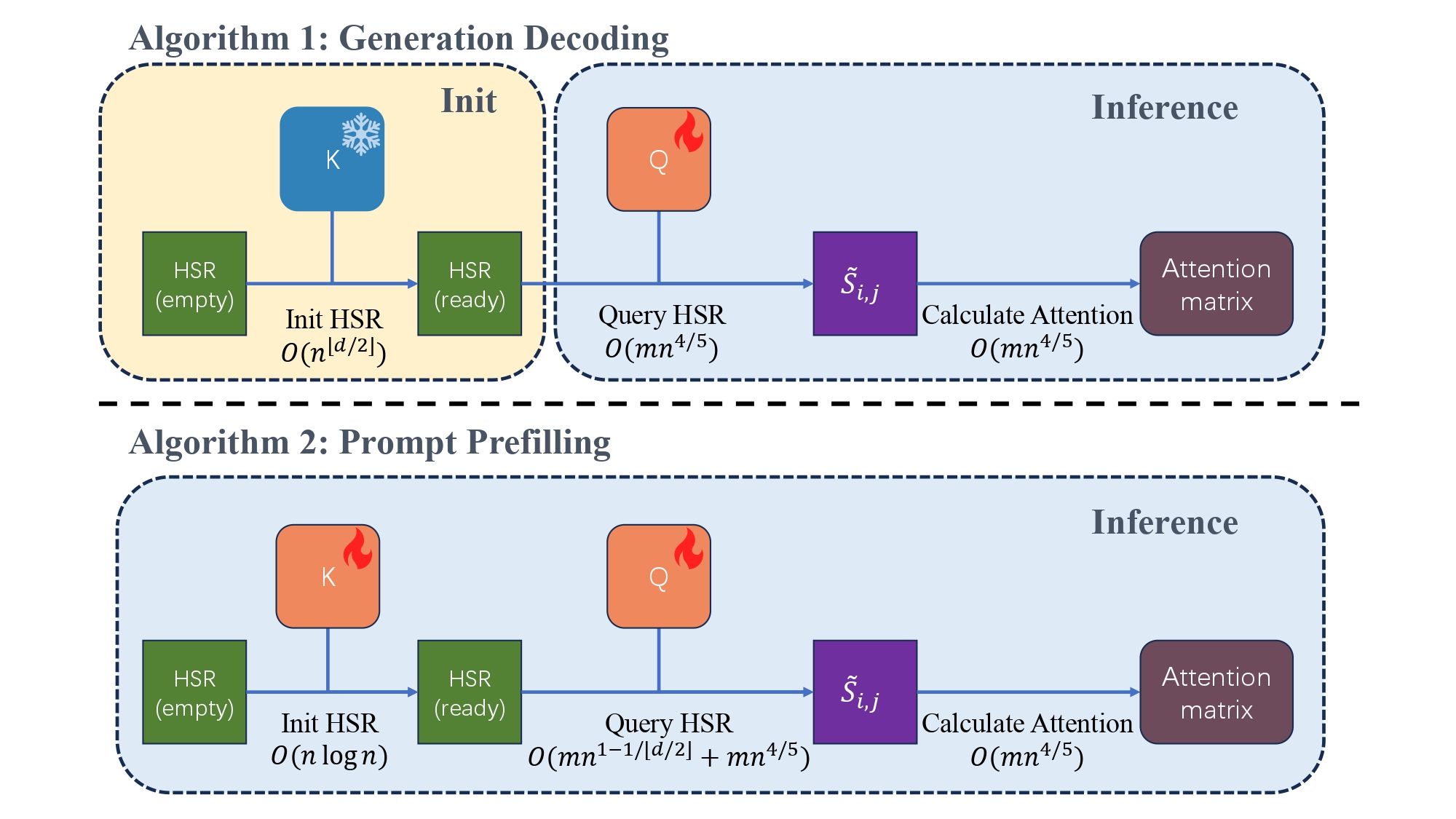

HSR-Enhanced Sparse Attention Acceleration*

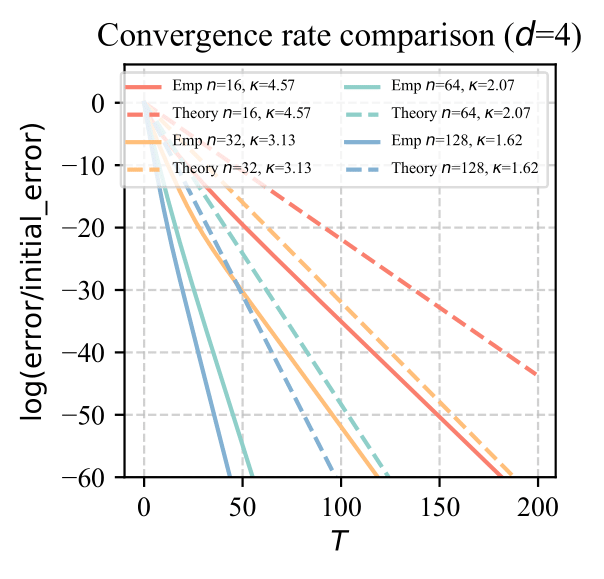

Bypassing the Exponential Dependency: Looped Transformers Efficiently Learn In-context by Multi-step Gradient Descent*

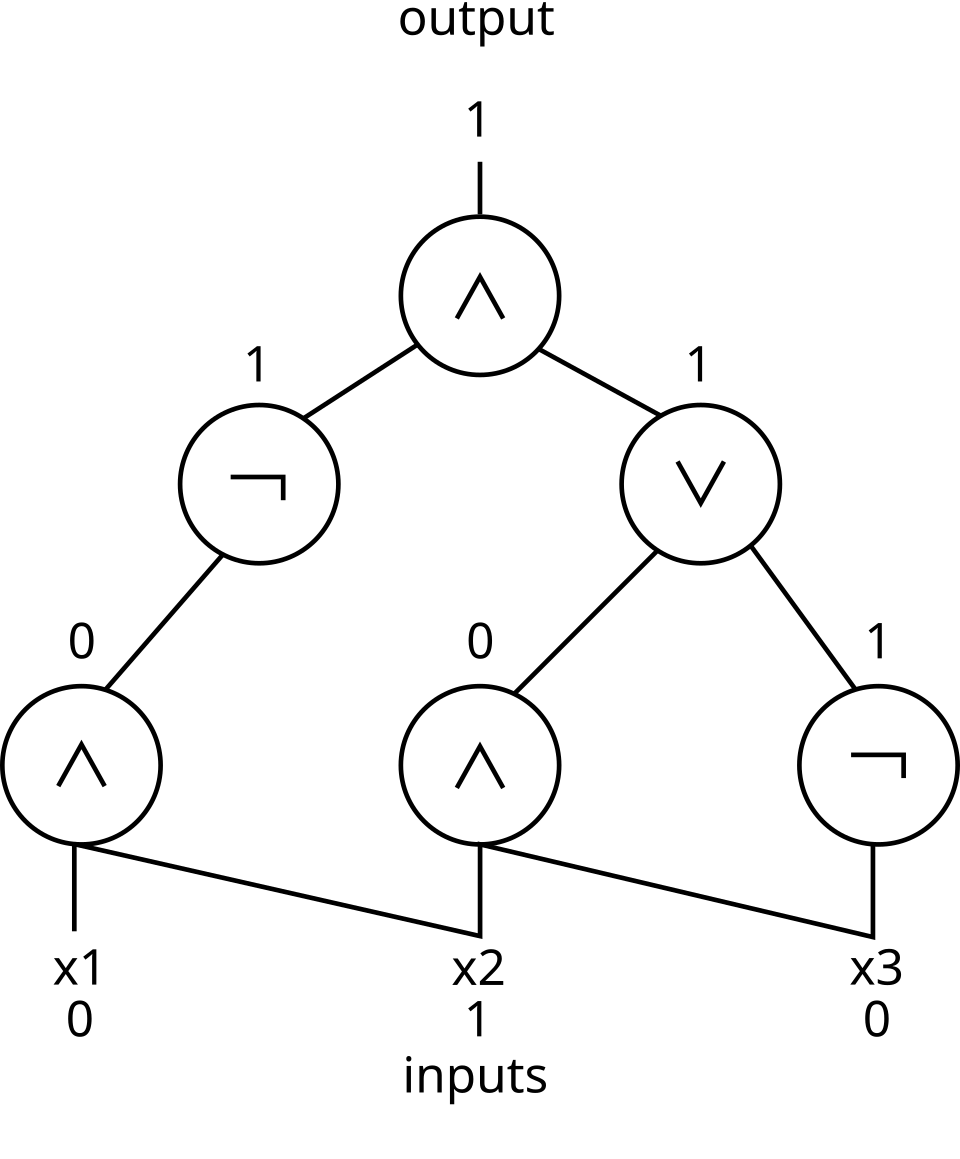

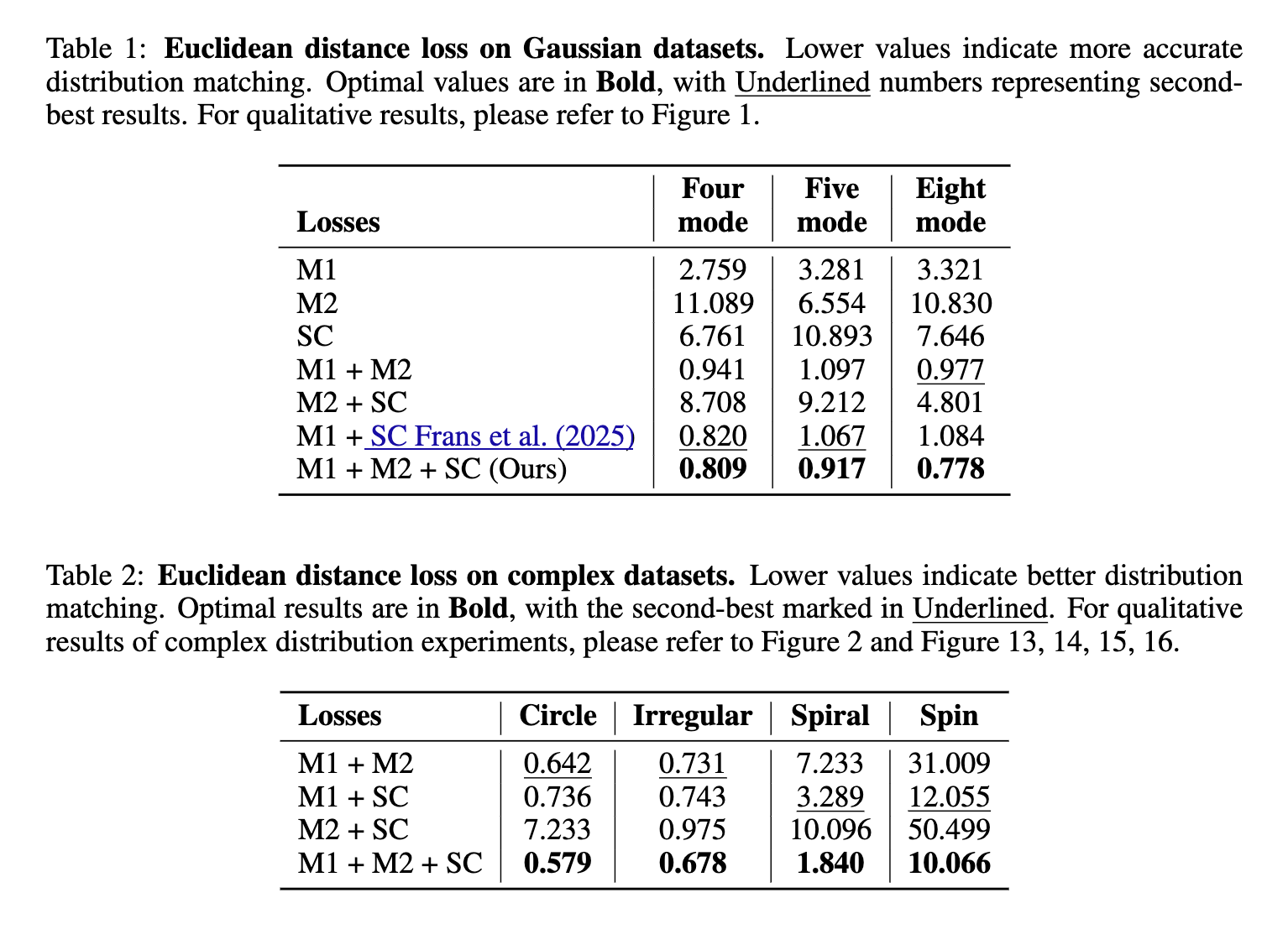

Force Matching with Relativistic Constraints: A Physics-Inspired Approach to Stable and Efficient Generative Modeling*

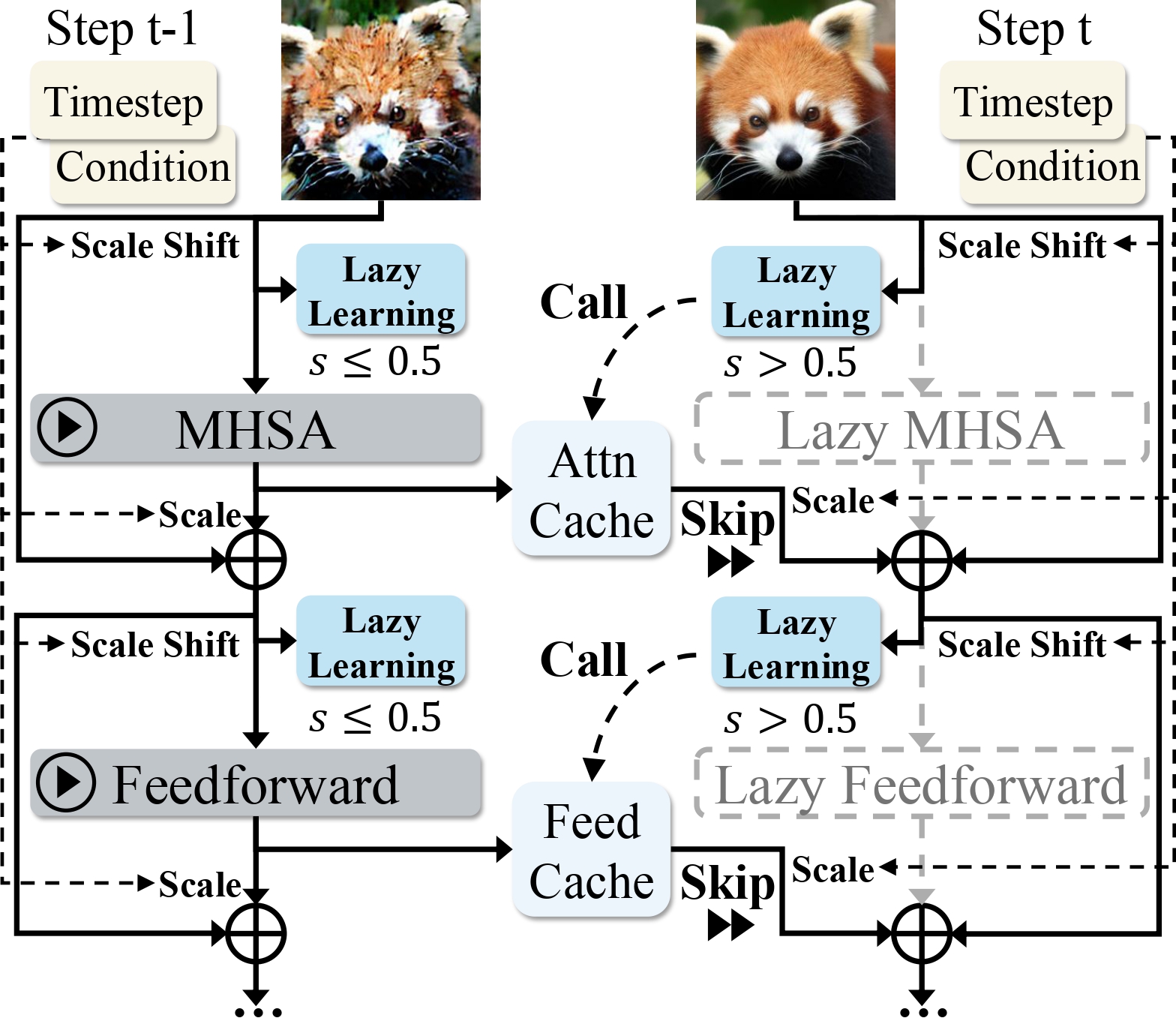

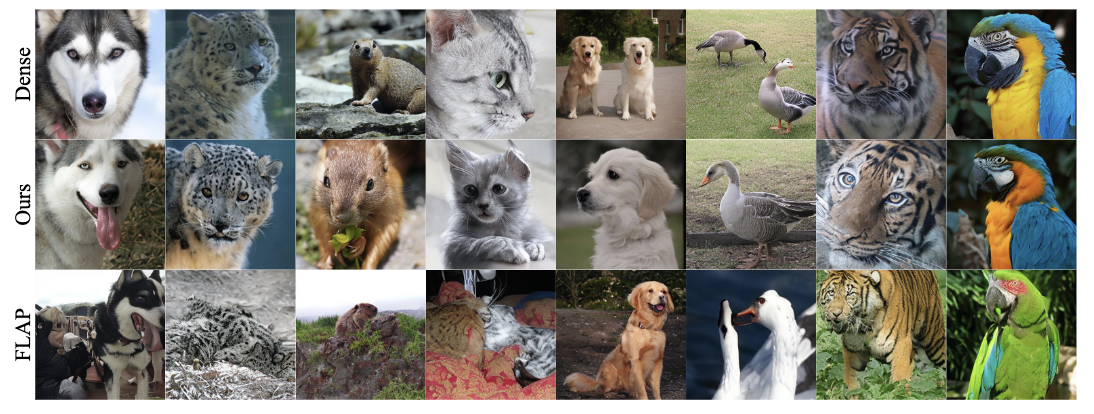

LazyDiT: Lazy Learning for the Acceleration of Diffusion Transformers

Numerical Pruning for Efficient Autoregressive Models